A Parallel Volume Rendering System for Scientific Visualization

Objective:

Develop a portable and scalable parallel volume rendering system for the

teraflop supercomputers to support

distributed visualization needs demanded by HPCC Grand Challenge applications

and general science community. The rendering system is capable of visualizing

large volumes of 4-D simulation/modeling data which are beyond that the

existing workstation capability and network bandwidth can handle.

Approach:

A parallel volume rendering Application Programming Interface

(API) based on the splatting algorithm was designed and implemented.

The API renders 3-D scalar and vector

fields in structured grids, in both time and space. An X-window based

GUI with multiple control panels was also designed and implemented for

interactive control of visualization

parameters and trackball control of viewing positions. A network interface was

built in between the GUI and the renderer API that supports image compression

over low-speed network and high-resolution displays such as HiPPI framebuffer

and Powerwall over high-speed network. All together, the distributed volume

rendering system is called the ParVox (Parallel Voxel Renderer).

Accomplishments:

The ParVox was first demonstrated in October 1997.

In FY'97, we continue the development and implementation of

the ParVox system. The major accomplishments include:

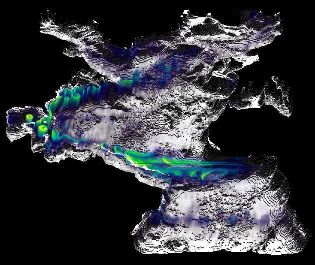

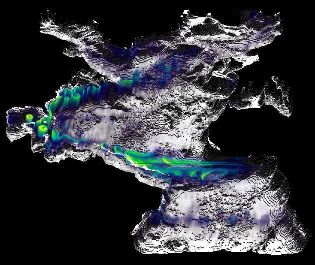

- Vector visualization -- we have tried several ways for visualizing

vectors, including Line Integral Convolution (LIC) and magnitudes.

Both methods convert vectors into scalars, then using scalar

rendering to look at the data. We found out LIC is not a very

intuitive way to visualize 3-D volumes; it is more effective if the

vectors can be mapped to a predefined surface. We also found out that

magnitude is a good representation for certain datasets. By

adjusting the voxel opacity based on the magnitude values, we are able to

visualize the eddy-resolving at Atlantic Ocean in the ocean velocity

datasets from the

Ocean Climate Modelling Project. The data was provided

Yi Chao, using the Parallel Ocean Program (POP).

A five-year ocean circulation animation was generated using

ParVox on the JPL Cray T3D. One still image from the animation is

shown in Figure 1.

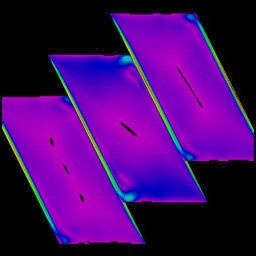

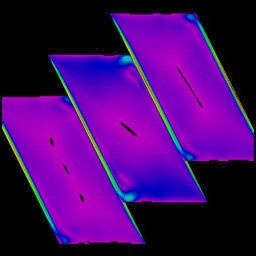

- Slice visualization -- Plotting 2-D slices is a common way to look at

3-D datasets. We added a new feature in ParVox to enable

visualization of multiple slices along three major axes. A

new control panel was built in GUI to allow users to define up to 10

slices with designated opacities. A multiple-slice image rendered

using data from a 3D Thermal Convective Flow Model is shown in Figure 2. Data was provided by

Ping Wang.

- Color and opacity editing -- We integrated a color editing software,

Icol,

developed by Army High Performance Computing Research Center

(AHPCRC), in the ParVox GUI. It is a useful tool for interactive

editing and sketching color maps and opacity maps that are

interpolated between key points.

In addition, we wrote a technical paper,

ParVox -- A Parallel Splatting Volume Rendering System for

Distributed Visualization. This paper will be published in

the IEEE

Proceedings of Parallel Rendering Symposium, October 1997. A ParVox

home page will be available in the near future.

Significance:

The demand for parallel supercomputer in interactive scientific visualization

is increasing as the ability of the machines to produce large output datasets

has dramatically increased. The ParVox system provides a solution for

distributive visualization of large time-varying datasets on scientist's

desktop using low-speed network and low-end workstations.

Status/Plans:

The major plan for FY'98 is to port ParVox to MPI2.0. ParVox uses

asynchronous one-way communication routines in Cray's shmem library to

interleave the rendering and compositing, thus hiding the

communication overhead and improving the parallel algorithm's

efficiency. The MPI2.0 specification, announced in July '97, includes

a one-way communication API that is very similar to Cray's shmem

library. To minimize the porting effort and optimize the memory

access performance of the shared-memory MPP, which is becoming the

dominant architecture for the parallel supercomputers, we decide to

port ParVox to the MPI2.0. Currently, none of the supercomputer

vendors are supporting MPI2.0 yet, but we expect it will become available

soon. The second plan for the FY'98 is to provide functional

pipelining by separating the compression module from the rendering

module and building interface between the application program and the

ParVox. A fully pipelined ParVox distributed visualization system is

illustrated in Figure 3.

Point of Contact:

P. Peggy Li

Jet Propulsion Laboratory

(818)354-1341

peggy@spartan.jpl.nasa.gov